Policy rules

Monetary economists like rules. Traditionally, they worry that policymakers will sacrifice the long-term benefits of price stability for the more immediate gratification of higher growth. Realizing how hard it is to resist temptation, politicians have delegated monetary policy to independent central banks, subject to mandates that constrain their discretion. This institutional setup helped lower inflation in the advanced economies from a median exceeding 10 percent in the late 1970s and early 1980s to about 2 percent by the late 1990s.

But some observers, convinced that overly accommodative financial conditions in the first few years of the century spurred the credit accumulation that fed the 2007-09 financial crisis, are pushing to constrain central banks further by requiring them to publish a simple policy rule and to account for their actions with reference to it. John Taylor has been the strongest proponent of this view, arguing that “predictable rules-based monetary policy is essential for good economic performance.”

Republican support for the Federal Reserve Oversight and Modernization (FORM) Act is raising the prominence of this issue. If it were to become law, the FORM Act would require the FOMC to develop a policy rule and compare it with a traditional Taylor rule (see, for example, page 45 of A Better Way: The Economy).

The arguments for a rule of this type are similar to those that were used to support inflation targeting. By restricting discretion, a rule makes big mistakes less likely. And by requiring policymakers to explain their actions with reference to the rule, it improves both the focus of internal discussions and the quality of external accountability.

But should we further constrain monetary policymakers in this way? Is it a good idea to move from the current system where policymakers’ ultimate target is dictated to them by elected officials to a system where the focus is on legislative monitoring of their operational instrument?

We are skeptical for a variety of reasons. First, achieving their mandated objective may require policymakers to deviate from a simple rule. For example, if the rule is based on current or past conditions (as is the simple Taylor rule), a desire to appear to be adhering to the rule—which may be viewed as politically safe—could delay or impede desirable policy shifts when developments alter future prospects. The list of such developments includes changes in financial conditions, shifts in government economic policies, domestic and international political disturbances, and even data quality and measurement problems.

Regardless of what the rule dictates, it also seems natural for policymakers to take account of low-probability, yet extremely adverse possible outcomes. The 2007-2009 financial crisis provides a stark example of a case when a simple interest rate rule would have proved to be insufficient. More generally, disturbances that raise the probability of hitting the effective lower bound (ELB) on nominal interest rates merit an aggressive response in part because conventional interest rate policy (as prescribed by most simple policy rules) becomes unavailable at the ELB. Other such tail risks that central bankers have used interest-rate policy to address include the possibility of falling into deflation or even the less catastrophic, but potentially costly, shift of long-term inflation expectations away from the target.

Turning to the details of our concerns, let’s examine the FORM Act’s reference policy rule against which the FOMC is to compare the (audited and published) directive policy rule on which it is to base its decisions. This reference rule is the original Taylor Rule, which we write as follows:

Policy Rate = 2 + Inflation + ½ (Inflation – 2) + ½ (Real output – Potential output).

That is, the rule says that the policy rate should be set equal to 2 percent plus the current annual inflation rate, plus one-half times the gap between current inflation and a target of 2 percent, plus one-half times the gap between current and potential real output (measured as a percent of potential).
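A minimal Python sketch of this reference rule follows. The function name and keyword arguments are our own, chosen for illustration. The defaults reproduce the original rule: a 2 percent steady-state real rate, a 2 percent inflation target, and coefficients of ½. The inputs in the example are hypothetical, not actual data.

```python
def original_taylor_rule(inflation, output_gap, r_star=2.0, inflation_target=2.0,
                         inflation_coef=0.5, output_coef=0.5):
    """Policy rate (percent) prescribed by the original Taylor rule.

    inflation:  annual inflation rate, percent
    output_gap: (real output - potential output) as a percent of potential
    """
    return (r_star + inflation
            + inflation_coef * (inflation - inflation_target)
            + output_coef * output_gap)


# Hypothetical inputs, not actual data: 1.5% inflation, output 1% below potential.
rate = original_taylor_rule(inflation=1.5, output_gap=-1.0)
print(f"{rate:.2f}")  # 2 + 1.5 + 0.5*(1.5 - 2) + 0.5*(-1) = 2.75
```

The steady-state real rate and both coefficients are parameters, so it is easy to see how the alternative assumptions discussed below change the prescription.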

Using the personal consumption expenditure (PCE) price index favored by the FOMC and the current Congressional Budget Office estimate of potential GDP, we can compute the reference rule rate and compare it to the federal funds rate that was set. The picture looks like this:

Looking at the chart, from the early 1980s to the mid-2000s the policy rate (in red) tracks the rule-implied rate (in blue) relatively closely (the correlation is 0.79). But there are some significant deviations. Note that the policy rate was persistently higher than the rule implied in the second half of the 1990s and lower in the aftermath of the 2001 recession.

Would it have been prudent for the Fed to adhere more closely to the original Taylor rule over the past 20 years? It is impossible to know exactly what would have happened if it had, but we doubt things would have turned out much better than they did, and they may very well have been worse. The first reason is that, as constructed, the rule ignores a range of factors that influence inflation, growth and employment. These include an array of financial conditions, such as the value and volatility of equity markets; the behavior of bond markets (especially the spread between investment-grade corporate bonds and Treasurys); the willingness of banks to lend, as measured by survey responses; and idiosyncratic movements in bank stock prices. (For a list of policy-relevant financial conditions, see Figure 4.2, page 46, here.)

For example, did it make sense for policymakers to respond to big financial shifts—the October 1987 equity market crash, the late 1990s tech boom and bust, the March 2008 failure of Bear Stearns and the September 2008 collapse of Lehman Brothers—insofar as they altered prospects for inflation and output? Taking the 2008 episode as an example, note that from October 8 to December 16 the FOMC slashed the target federal funds rate from 2 percent to the 0 to 0.25 percent range. Considering the subsequent depth of the downturn, few have challenged this aggressive response, but it was not the result of adherence to the Taylor rule, which would have delayed such action at least until data became available in the first quarter of 2009.

The second reason is the effective lower bound (see, for example, the analysis of San Francisco Fed President John Williams). Here the concern is that, since there is a lower limit below which policymakers cannot push interest rates, there will be times when they should ease by more than the rule implies in order to avoid being compelled to use less predictable (and politically more controversial) unconventional policy tools for stabilizing the economy. The cost of temporarily tolerating a modest inflation overshoot may be small compared to the potential damage to the economy when policy is stuck at the ELB.
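The sketch below illustrates the constraint. Once the rule prescribes a rate below the floor, the difference cannot be delivered through the policy rate. For illustration, we assume an ELB of 0.125 percent (the midpoint of a 0 to 0.25 percent target range) and hypothetical inputs. The function names are our own.

```python
def rule_rate(inflation, output_gap, r_star=2.0):
    """Original Taylor rule prescription, in percent."""
    return r_star + inflation + 0.5 * (inflation - 2.0) + 0.5 * output_gap


def feasible_rate(prescribed, elb=0.125):
    """The policy rate cannot be pushed below the (assumed) effective lower bound."""
    return max(prescribed, elb)


# Hypothetical severe downturn: 0.5% inflation, output 6% below potential.
prescribed = rule_rate(inflation=0.5, output_gap=-6.0)
actual = feasible_rate(prescribed)
shortfall = actual - prescribed  # easing the rule calls for that rates cannot deliver
print(f"prescribed {prescribed:.2f}, feasible {actual:.3f}, shortfall {shortfall:.3f}")
# prints: prescribed -1.25, feasible 0.125, shortfall 1.375
```

In a case like this, the missing 1.375 percentage points of easing would have to come, if at all, from unconventional tools. This is why easing more than the rule implies before reaching the floor can be attractive.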

Other concerns arise if, as the FORM Act stipulates, the FOMC is required to use the original Taylor rule as its benchmark. First, both actual GDP and potential GDP are subject to large revisions, so that real-time observations can be misleading for policy. For example, the current estimate of end-2016 potential GDP is fully 10 percent below the projection at the beginning of 2008. Such practical data problems have led many observers of the business cycle to shift from using GDP-based policy rules to ones based on the unemployment rate, which is subject to fewer, and far smaller, revisions. Estimates of the natural rate of unemployment do move, but not by nearly as much.
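To see how much a revision of this size can matter, the sketch below computes the original rule's prescription twice. Inflation and actual output are held at the same hypothetical values. One calculation uses a later potential-output estimate, and the other uses an earlier projection roughly 11 percent higher (so the later estimate is 10 percent below it). The index levels and function names are illustrative assumptions, not CBO data.

```python
def output_gap(real_output, potential):
    """Output gap as a percent of potential output."""
    return 100.0 * (real_output - potential) / potential


def rule_rate(inflation, gap):
    """Original Taylor rule: r* = 2, 2 percent target, coefficients of 1/2."""
    return 2.0 + inflation + 0.5 * (inflation - 2.0) + 0.5 * gap


# Hypothetical index levels and inflation, not actual data.
actual_output = 100.0
potential_now = 100.0                     # later estimate: output at potential
potential_earlier = potential_now / 0.9   # earlier projection; later one is 10% below it
inflation = 2.0

rate_now = rule_rate(inflation, output_gap(actual_output, potential_now))
rate_earlier = rule_rate(inflation, output_gap(actual_output, potential_earlier))
print(f"later estimate: {rate_now:.1f}, earlier projection: {rate_earlier:.1f}")
```

With the higher projection, the economy appears to be about 10 percent below potential. The rule's prescription is therefore roughly 5 percentage points lower (½ × 10), even though actual output and inflation are identical.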

Similarly, how should one measure inflation? While many central banks focus on consumer price indices, the FOMC has chosen to focus on the PCE. But what version of the PCE? Should they use headline or core? And if they choose core, do they drop food and energy, or do they go to a statistical indicator of the trend, like a median or trimmed mean (see here)?
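A trimmed mean drops the most extreme price changes, weighted by expenditure shares, before averaging. The sketch below is a generic weighted trimmed mean, not the method of any particular statistical agency. The function name, the symmetric 25 percent trims, and the four-component data are all hypothetical. The Dallas Fed's published trimmed mean PCE, for instance, trims different shares from each tail.

```python
def weighted_trimmed_mean(changes, weights, trim_low, trim_high):
    """Weighted mean of price changes after removing the trim_low share of total
    weight from the bottom of the distribution and the trim_high share from the top.
    A component straddling a cut point keeps only the part of its weight inside.
    Requires trim_low + trim_high < 1."""
    total = sum(weights)
    lo_cut = trim_low * total
    hi_cut = (1.0 - trim_high) * total
    kept_sum = kept_weight = cum = 0.0
    for change, w in sorted(zip(changes, weights)):  # order components by price change
        start, end = cum, cum + w
        cum = end
        inside = max(0.0, min(end, hi_cut) - max(start, lo_cut))
        kept_sum += change * inside
        kept_weight += inside
    return kept_sum / kept_weight


# Hypothetical component inflation rates (percent) with equal expenditure weights.
changes = [-3.0, 1.0, 2.0, 9.0]
weights = [1.0, 1.0, 1.0, 1.0]
headline = sum(c * w for c, w in zip(changes, weights)) / sum(weights)  # 2.25
trimmed = weighted_trimmed_mean(changes, weights, 0.25, 0.25)           # 1.5
```

Here the two extreme components (-3 and 9 percent) pull the simple weighted mean to 2.25 percent. The trimmed mean discards both and comes out at 1.5 percent.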

Perhaps the largest measurement concern is the challenge of estimating the “steady-state” (or “natural”) real interest rate. In the original Taylor rule, it is set at 2 percent. But there is considerable evidence that it has fallen substantially, and it could now be as low as 0.5 percent (see here).
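The steady-state real rate enters the rule additively with a coefficient of one, so any change in its estimate passes through point-for-point to the prescribed rate. The short derivation below writes the constant 2 in the reference rule as $r^*$ and holds inflation and the output gap fixed. The notation ($i$, $\pi$, $y$) is ours.

```latex
% i: policy rate, \pi: inflation, y: output gap (percent of potential)
i = r^* + \pi + \tfrac{1}{2}(\pi - 2) + \tfrac{1}{2}\,y
\qquad
\frac{\partial i}{\partial r^*} = 1
\quad\Longrightarrow\quad
\Delta i = \Delta r^* = 0.5 - 2 = -1.5 \text{ percentage points}
```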

Finally, there is the question of how responsive the FOMC should be to inflation gaps and to output or unemployment gaps. Former Fed Chair Ben Bernanke argues that the coefficient on the output gap should be 1 rather than ½. Using this value, pre-crisis policy looks close to the rule-implied rate, and policy since 2009 does not look overly accommodative.

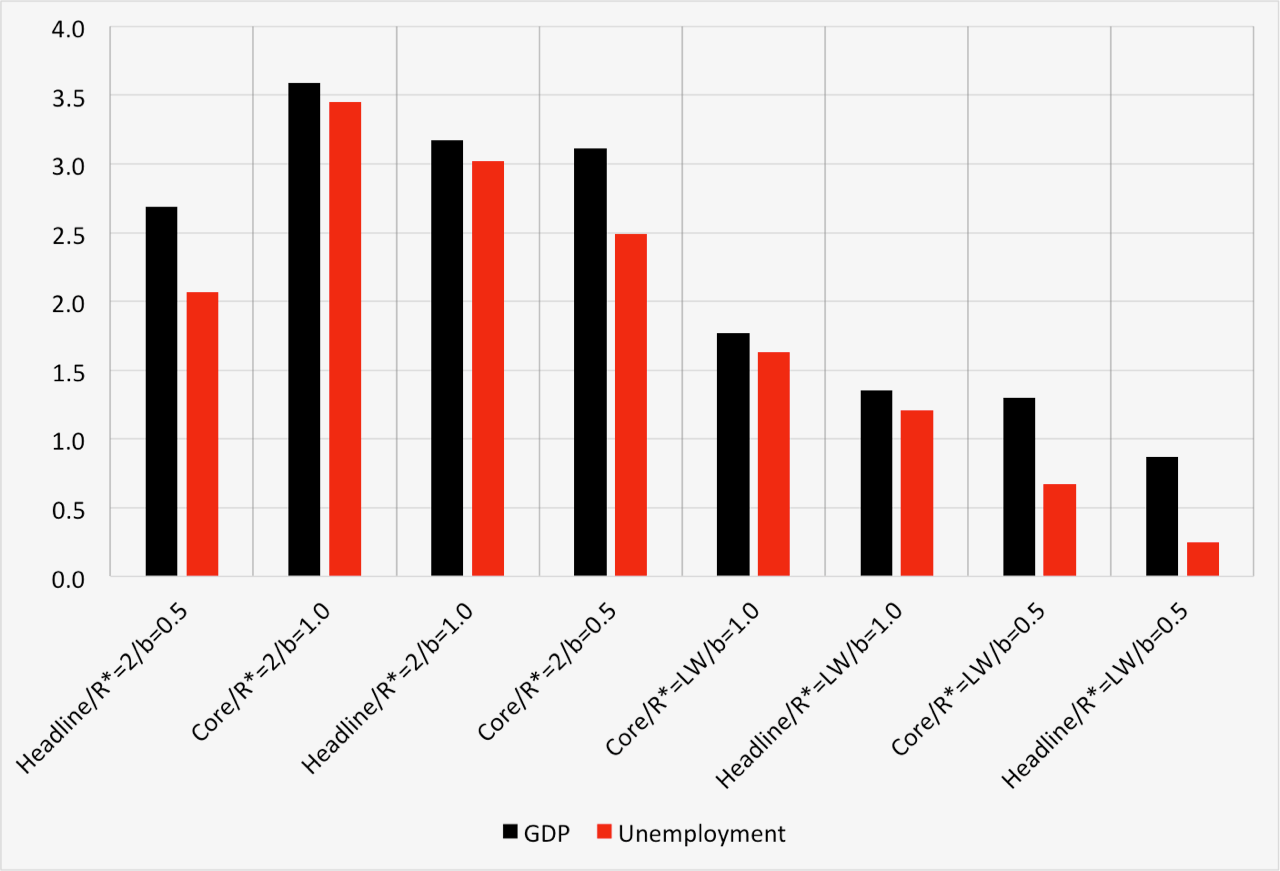
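The larger output-gap coefficient matters most when output is below potential: each percentage point of shortfall lowers the prescription by a full point rather than half a point. The minimal sketch below uses hypothetical inputs (1.5 percent inflation, output 4 percent below potential) and a function name of our own.

```python
def taylor_rate(inflation, output_gap, output_coef, r_star=2.0):
    """Taylor-type rule with a 2 percent target and an inflation-gap coefficient of 1/2."""
    return r_star + inflation + 0.5 * (inflation - 2.0) + output_coef * output_gap


# Hypothetical post-recession conditions, not actual data.
for output_coef in (0.5, 1.0):
    rate = taylor_rate(inflation=1.5, output_gap=-4.0, output_coef=output_coef)
    print(f"output_coef={output_coef}: {rate:.2f}")
# prints:
# output_coef=0.5: 1.25
# output_coef=1.0: -0.75
```

The 2-point difference between the two prescriptions is (1 − ½) × 4. When output is above potential, the larger coefficient instead calls for a higher rate.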

What this all means is that if you tell a group of serious, well-informed people that they should set policy interest rates based on a simple rule, they could very well come up with quite different numbers, limiting the information value of a comparison with the original Taylor rule. To give a sense of how different the answers might be, we used the Federal Reserve Bank of Atlanta’s new Taylor rule utility and computed the implied level of the policy rate under a range of different assumptions. We adjust four quantities: we allow the output gap to be measured by either GDP or unemployment (for the unemployment rate, the coefficient is double that for GDP); we let inflation be measured by either headline or core PCE excluding food and energy; we include cases where the natural real interest rate (R*) either equals 2 (as in the FORM Act) or follows the estimates of Laubach and Williams; and finally, we allow the coefficient on the output gap to be either ½ or 1.
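The exercise varies four choices with two options each, giving 2 × 2 × 2 × 2 = 16 variants. The sketch below reproduces that structure but not the Atlanta Fed utility's code. Every input (the inflation rates, the gaps, and the model-based R* value) is a hypothetical placeholder rather than November 2016 data. We also assume the unemployment gap is measured as the natural rate minus the actual rate, so that, like the GDP gap, it is positive when the economy is running hot.

```python
from itertools import product


def taylor_rate(inflation, gap, r_star, gap_coef):
    """Taylor-type rule with a 2 percent inflation target and an inflation-gap coefficient of 1/2."""
    return r_star + inflation + 0.5 * (inflation - 2.0) + gap_coef * gap


# Hypothetical placeholders, NOT actual data.
inflation_measures = {"headline PCE": 1.4, "core PCE": 1.7}
r_star_values = {"R* = 2 (FORM Act)": 2.0, "model-based R*": 0.2}
gap_measures = {
    "GDP gap": (-0.5, 1.0),           # percent of potential; coefficient as given
    "unemployment gap": (0.2, 2.0),   # natural minus actual rate; coefficient doubled
}
output_coefs = (0.5, 1.0)

results = {}
for (g_name, (gap, mult)), (p_name, infl), (r_name, r_star), coef in product(
    gap_measures.items(), inflation_measures.items(), r_star_values.items(), output_coefs
):
    results[(g_name, p_name, r_name, coef)] = taylor_rate(infl, gap, r_star, mult * coef)

print(len(results))  # 16 variants
low, high = min(results.values()), max(results.values())
print(f"rule-implied rates range from {low:.2f} to {high:.2f} percent")
```

Because R* shifts every prescription one-for-one, it accounts for the largest single piece of the spread even with these made-up inputs.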

The following chart displays the results of this exercise. Using current data, the rule-implied policy rates range from 0.25 percent to 3.59 percent! (The FORM Act’s reference rule using headline PCE inflation, on the far left of the chart, implies a policy rate of 2.69 percent.) Some changes clearly make a bigger difference than others. For example, moving from headline to core inflation raises the rule-implied rate by less than half a percentage point. By comparison, changing the estimate of the current natural rate of interest has an enormous impact, lowering the reference rule rate by more than 1.8 percentage points. Our point is that all of these estimates are defensible and legitimate. Consequently, having the legislature monitor deviations from any one of these rules seems to offer little value, yet it could unduly influence FOMC operations.

Current interest rates implied by versions of the Taylor rule, November 2016

Source: FRB Atlanta, Taylor rule utility.

To be sure, the FORM Act includes the following escape clause:

Nothing in this Act shall be construed to require that the plans with respect to the systematic quantitative adjustment of the Policy Instrument Target … be implemented if the Federal Open Market Committee determines that such plans cannot or should not be achieved due to changing market conditions. Section 2C(e)(1).

In other words, the Act allows the FOMC to deviate from the published (and GAO-audited) policy rule, so long as it explains that, because circumstances have changed, its macroeconomic and financial stability objectives are better served by doing so.

Yet, by virtue of selecting a specific operational benchmark, the FORM Act will alter both private and public discussions, and could very well change interest rate setting itself. The point of the Act is to put pressure on the FOMC to limit deviations from a reference rule. This constraint could easily lead policy to be tighter or looser than conditions warrant.

In conclusion, we share the view that monetary policy should be predictable. We believe that policymakers should have in mind a reaction function consistent with their legal mandate. This means knowing how they expect to change their interest rate target as financial and economic conditions evolve. To the extent feasible, they should share that reaction function with the public. Such transparency would help speed financial and economic adjustment to unforeseen shocks, reducing volatility. Nevertheless, we doubt that requiring operational accountability with reference to a simple policy rule will improve performance.